|

(17th July 2014)

|

|

In addition to a mirrored and check-summed ZFS based backup server, I wanted to have backups outside by premises to be safer against hazards such as burglary, fire and water damage. ZFS can already resist single disk failure and can repair silent data corruption, but for important memories that isn't sufficient level of protection. My ever-growing data set is currently 150k files, having a total size of 520 Gb. Amazon's Glacier seems to be the most cost efficient solution with sophisticated APIs and SDKs.

|

|

|

(7th June 2014)

|

|

From my office window I've got an unblocked size-view to the Ring Road I (Kehä I) in Espoo, Finland. It is one of the busiest roads in Finland, having up-to 100.000 cars / day. I wanted to create a program which would receive a video feed from a webcam and would process images in real time on common hardware.

|

|

|

(26th April 2014)

|

|

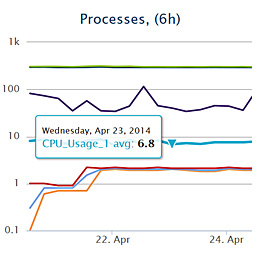

There already exists many server monitoring and logging systems, but I was interested to develop and deploy my own. It was also a good chance to learn about ElasticSearch's aggregation queries (new in v1.0.0). Originally ElasticSearch was designed to provide scalable document based storage and efficient search, but now it is gaining more capabilities. The project consists of a cron job which pushes new metrics to ElasticSearch, a RESTful JSON API to query statistics on recorded numbers and plot the results in a browser (based on HighCharts).

|

|

|

(1st December 2013)

|

|

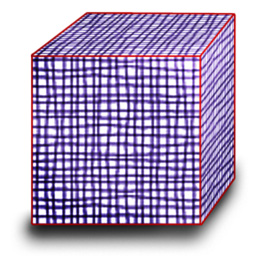

Traditional databases such as MySQL are not designed to perform well in analytical queries, which requires access to possibly all of the rows on selected columns. This results in a full table scan and it cannot benefit from any indexes. Column-oriented engines try to circumvent this issue, but I went one step deeper and made the storage column value oriented, similar to an inverted index. This results in 2 — 10× speedup from optimized columnar solutions and 80× the speed of MySQL.

|

|

|

(10th November 2013)

|

|

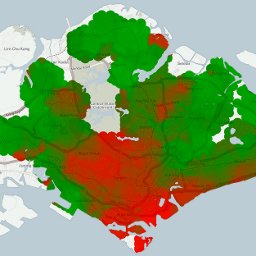

I wanted a real-time map generator to visualize regional property price changes based on chosen time interval. I didn't want to resort to pre-generated tiles, because this would prevent user-customized output and limit configuration options. To get the best performance, I implemented a FastCGI process in C++ with a RESTful interface to generate the required tiles in parallel. The resulting program can generate a customized 1280 × 720 resolution JPG in 30 milliseconds and equivalent PNG in 60 milliseconds.

|

|

|

(3rd November 2013)

|

|

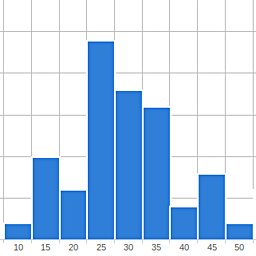

When developing RESTful APIs, it is important to know how many requests per minute the end point is able to serve. Because of my interest in Nginx, FastCGI and multi-threaded C+++, I decided to develop my own in-broser HTTP load tester which supports easy configuration, any number of parallel load-generating worker threads and real-time graphing based on jQuery powered HighCharts library.

|

|

|

(15th July 2013)

|

|

As mentioned in an other article about omnidirectional cameras, my Master's Thesis' main topic was real-time interest point extraction and tracking on an omnidirectional image in a challenging forest environment. I found OpenCV's routines mostly rather slow and running in a single thread, so I ended up implementing everything myself to gain more control on the data flow and threads' dependencies. The implemented code would simultaneously use 4 threads on CPU and a few hundred on the GPU, executing interest point extraction and matching at 27 fps (37 ms/frame) for 1800 × 360 pixels (≈0.65 Mpix) panoramic image.

|

|

|

(14th July 2013)

|

|

I got tired of manually organizing my downloads folder, and after not being happy with existing solutions I decided to write a PHP script which would enable me to easily configure new rules for file and folder management. This is achieved by having a flexible class hierarchy, so that you don't end up repeating yourself when configuring new rules and actions.

|

|

|

(13th July 2013)

|

|

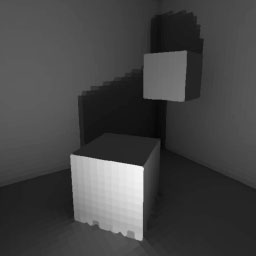

I implemented a simple global illumination algorithm, which constructs and solves the sparse matrix which describes how much tiles reflect light to each other. It supported only gray-scale rendering and wouldn't scale up to bigger scenes, but it was nevertheless an interesting project and I learnt a lot about 3D geometry, linear algebra and computer graphics. Graphics was drawn by a software renderer which uses only standard SDL primitive draw calls.

|

|

|

(13th July 2013)

|

|

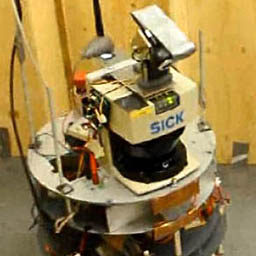

At spring 2012 I did a course in robotics, which involved programming a semi-automatic robot which could fetch items from pre-determined locations and return them back to correct deposit bins. I had a team of four people, and I solved the problems of continuous robust robot localization, task planning and path planning. Others focused on the overall source code architecture, state-machine logic, closed loop driving control and other general topics. The used robot can be seen in Figure 1.

|

|

|

(10th July 2013)

|

|

A good presentation about hyphenation in HTML documents can be seen here, but it is client side (JavaScript) oriented. Basically you shouldn't use justified text unless it is hyphenated, because long words will cause huge spaces between words to make the line stretch out the whole width of the element. I found a few PHP scripts such as phpHyphenator 1.5, but typically they weren't implemented as a single stand-alone PHP class. Since the underlying algorithm is fairly simple, I decided to write it from scratch.

|

|

|

(9th July 2013)

|

|

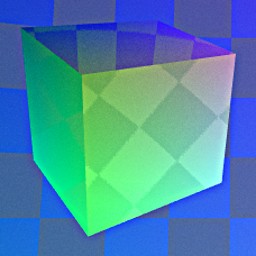

So far I've written a basic rendering engine which uses Nvidia's CUDA (Compute Unified Device Architecture) which can render reflective surfaces with environmental mapping and anti-aliasing and motion blur at 200 fps with minimal usage of 3rd party libraries such as OpenGL. This let me fully implement the cross-platform rendering pipeline from data transfer to pixel-level RGB calculations, all in C-like syntax.

|

|

|

(7th July 2013)

|

|

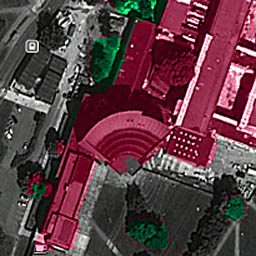

During the summer of 2012 when I was mainly working on my Master's Thesis, I also had a look at National Land Survey of Finland's open data download service. There I downloaded a point cloud dataset which had typically 4 - 5 measured points / square meter. This means that to visualize a region of 2.5 × 2 km, I had to work with a point cloud consisting of 5 × 2500 × 2000 → 25 million points. I chose to concentrate on my campus area, because I know it well and it has many interesting landmarks. For example the iconic main building can be seen in Figure 1.

|

|

|

(7th July 2013)

|

|

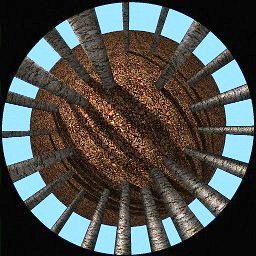

As I mentioned in the previous article about omnidirectional cameras, my Masters of Science Thesis involved the usage of this special kind of imaging system which consists of a traditional camera lens and a concave mirror, which provided 360° × 90° Field of View. It was ordered from Japan and there was some delay in the delivery, so meanwhile I wrote an all-Matlab script to simulate this system's properties, calibration and panorama generation in practice.

|

|

|

(6th July 2013)

|

|

My Masters of Science Thesis involved the usage of a so-called "omnidirectional camera". There are various ways of achieving 180° or even 360° view, with their distinct pros and cons. The general benefit of these alternative camera systems is that objects don't need to be tracked, because generally they stay withing the extremely broad Field of View (FoV) of the camera. This is also very beneficial in visual odometry tasks, because landmarks can be tracked for longer periods of time.

|

|