As I mentioned in the previous article about omnidirectional cameras, my Master of Science thesis involved this special kind of imaging system, which consists of a traditional camera lens and a concave mirror and provides a 360° × 90° field of view. The camera was ordered from Japan and the delivery was delayed, so in the meantime I wrote an all-Matlab script to simulate the system's properties, calibration and panorama generation in practice.

The rendering geometry is based on a single infinite ground plane and a few dozen infinite cylinders that represent tree trunks. The whole rendering script is only 500 lines of Matlab code, but it is fairly complex because it uses sparse matrix algebra to do fast texture lookups and to avoid for loops, which generally perform badly in Matlab. This also makes the code automatically multithreaded, because Matlab can use several cores to execute matrix operations.

After the camera parameters and position have been set up, the ground plane equation has been determined and the tree trunks have been generated, the script renders the image in four smaller tiles, which are later stitched together. Rendering the whole image at once might run out of memory, especially if the calculations are executed using GPU primitives.

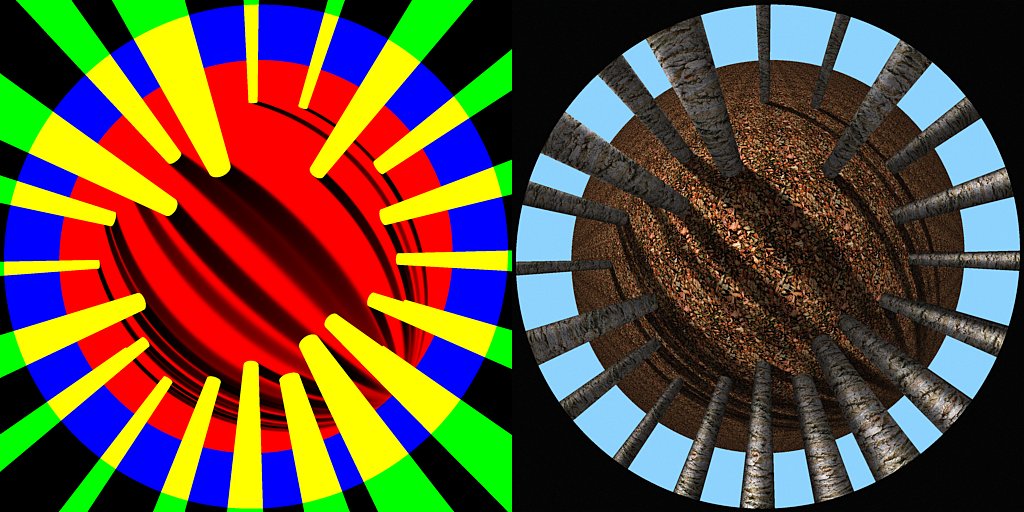

First a light ray is cast for each image pixel, and its first collision with the mirror's paraboloid surface is determined. To make the model more realistic, lens distortion is also simulated. After the collision with the mirror has been calculated, the ray is reflected based on the mirror's local normal vector. The resulting origin point and direction are then transformed from the camera's local coordinate system to world coordinates. Next, the collision locations with the ground plane are calculated simultaneously for all pixels within the current tile, using standard linear algebra. If the tile is 1024 × 1024 pixels, the matrix A in A x = b has a size of (3 × 1024²) × (3 × 1024²), which is about 10¹³ elements! But since only 5 × 10⁻⁵ % of them are non-zero, solving this takes only about one second. The end result is the coordinates (3 × 1024 × 1024 elements) at which the rays hit the ground plane, and these are used to sample the ground plane's texture. The texture used can be seen in Figure 1 on the left. Pixels whose rays would be reflected to an angle outside the set bounds are ignored, which produces the dark perimeter of the image. In these renderings there was no lower limit for the angle, meaning that the camera could see "through itself" to the ground directly under it.
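The reflection and ground-plane steps can be illustrated for a single ray. The sketch below is plain Python rather than the original vectorized Matlab, and the helper names (`reflect`, `ray_plane_hit`, `system_size`), the example mirror normal and the plane convention n · p + d = 0 are assumptions made for illustration. The last helper only redoes the matrix-size arithmetic quoted above.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(c / n for c in v)

def reflect(d, n):
    """Mirror direction d about the unit normal n: r = d - 2 (d . n) n."""
    k = 2.0 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def ray_plane_hit(origin, direction, plane_n, plane_d):
    """Intersect origin + t * direction with the plane plane_n . p + plane_d = 0.

    Returns (t, point), or None when the ray is parallel to the plane
    or the intersection lies behind the ray origin.
    """
    denom = dot(plane_n, direction)
    if abs(denom) < 1e-12:
        return None
    t = -(dot(plane_n, origin) + plane_d) / denom
    if t <= 0.0:
        return None
    return t, tuple(o + t * d for o, d in zip(origin, direction))

def system_size(tile_px, nonzero_percent):
    """Unknowns, total matrix elements and non-zero count for one square tile."""
    unknowns = 3 * tile_px * tile_px
    total = unknowns * unknowns
    return unknowns, total, total * nonzero_percent / 100.0

# Hypothetical example: a ray travelling straight up meets a mirror patch
# at height 1.5 whose normal is tilted towards +x, then falls to the ground z = 0.
incoming = (0.0, 0.0, 1.0)
mirror_normal = normalize((1.0, 0.0, -2.0))
outgoing = reflect(incoming, mirror_normal)
hit = ray_plane_hit((0.0, 0.0, 1.5), outgoing, (0.0, 0.0, 1.0), 0.0)
unknowns, total, nonzeros = system_size(1024, 5e-5)
```

Here the reflected direction is (0.8, 0, -0.6) and the ray reaches the ground at roughly (2, 0, 0). For the 1024 × 1024 tile the arithmetic gives 3,145,728 unknowns, about 9.9 × 10¹² matrix elements and roughly 4.9 million non-zeros.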

Next we need to iterate through each tree and check it for two things. The first step is to determine which pixels the tree casts a shadow on, based on the directional light source's direction. For those pixels, a rather complex formula is evaluated to determine the amount of shadowing. It takes into account the trunk's diameter, the position's distance from the trunk and any previously cast shadows. Shadows are drawn before tree trunks, to make sure that they do not incorrectly darken the trunks. The resulting shadows are very convincing, mimicking the well-known properties of soft shadows.
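The soft-shadow formula itself is not given here, so the following is only a hard-shadow simplification of the "which pixels are shadowed" test, not the script's actual method. It assumes vertical trunks, a flat ground and a light direction vector pointing from the scene towards the light; the function name `shadowed_by_trunk` is hypothetical.

```python
import math

def shadowed_by_trunk(point_xy, trunk_xy, radius, light_dir):
    """True if an infinite vertical trunk blocks the light at a ground point.

    Because the trunk is infinitely tall and vertical, the test reduces to 2D:
    walk from the ground point towards the light and check whether that
    horizontal ray passes within `radius` of the trunk axis.
    """
    lx, ly = light_dir[0], light_dir[1]
    horiz = math.hypot(lx, ly)
    if horiz < 1e-12:
        return False  # light straight overhead: no shadow on the ground
    ux, uy = lx / horiz, ly / horiz
    rx = trunk_xy[0] - point_xy[0]
    ry = trunk_xy[1] - point_xy[1]
    along = rx * ux + ry * uy        # how far towards the light the trunk lies
    across = abs(rx * uy - ry * ux)  # perpendicular distance from the trunk axis
    return along > 0.0 and across < radius

# Hypothetical scene: light comes from the -x side, trunk at the origin.
light = (-1.0, 0.0, 1.0)
in_shadow = shadowed_by_trunk((2.0, 0.1), (0.0, 0.0), 0.2, light)
beside_shadow = shadowed_by_trunk((2.0, 0.5), (0.0, 0.0), 0.2, light)
lit_side = shadowed_by_trunk((-2.0, 0.0), (0.0, 0.0), 0.2, light)
```

A soft shadow could replace the boolean with a value that fades with `across` and with the distance from the trunk, which is the kind of dependence the paragraph above describes.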

In the second step we check in which pixels the tree trunk is visible, by solving the closest distance from the cylinder's surface to each cast ray. Since the cylinders have an infinite length, in theory we would also see them underground. That is why we need to confirm that this distance is smaller than the distance to the ground plane. If this is the case, the distance is recorded for future use.
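For one ray and one trunk, the visibility check can be sketched as a quadratic in the ray parameter. This is a per-ray illustration under the assumption that the trunks are vertical cylinders; the names `ray_cylinder_distance` and `trunk_visible` are made up for this example.

```python
import math

def ray_cylinder_distance(origin, direction, trunk_xy, radius):
    """Smallest positive t at which origin + t * direction touches an infinite
    vertical cylinder, or None if the ray misses it.

    Only x and y matter for a vertical cylinder, so we solve
    |(origin_xy - trunk_xy) + t * direction_xy|^2 = radius^2 for t.
    """
    fx = origin[0] - trunk_xy[0]
    fy = origin[1] - trunk_xy[1]
    dx, dy = direction[0], direction[1]
    a = dx * dx + dy * dy
    if a < 1e-12:
        return None  # ray runs parallel to the trunk axis
    b = 2.0 * (fx * dx + fy * dy)
    c = fx * fx + fy * fy - radius * radius
    disc = b * b - 4.0 * a * c
    if disc < 0.0:
        return None
    root = math.sqrt(disc)
    for t in ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a)):
        if t > 0.0:
            return t
    return None

def trunk_visible(t_trunk, t_ground):
    """The trunk is seen only if it is hit before the ray reaches the ground."""
    return t_trunk is not None and (t_ground is None or t_trunk < t_ground)

# Hypothetical ray that reaches the ground plane z = 0 at t = 2.5.
origin, direction, t_ground = (0.0, 0.0, 1.5), (0.8, 0.0, -0.6), 2.5
t_near = ray_cylinder_distance(origin, direction, (1.0, 0.0), 0.2)
t_far = ray_cylinder_distance(origin, direction, (3.0, 0.0), 0.2)
t_miss = ray_cylinder_distance(origin, direction, (1.0, 5.0), 0.2)
```

With a unit-length direction, `t` is the distance along the ray. The near trunk is hit at about t = 1.0 and is visible, while the far trunk would only be hit at about t = 3.5, below the ground, so `trunk_visible` rejects it.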

These are the most time-consuming steps, taking 11 seconds for a tile of 1024 × 1024 pixels when 32 trees are present. This could be optimized from the naive O(n × t) (where n is the number of trees and t is the number of tiles) closer to O(n), by skipping those trees which can be determined neither to be visible in the current tile nor to cast any visible shadows there.

Once the distances to the trees have been calculated, the ray-cylinder collision locations are computed. From these the surface normals of the trunks can be determined, and the normals are randomly perturbed to create a more realistic result. After this the reflected ray directions are calculated and combined with the light source's direction to determine the amount of shadowing or sun ray reflection, which is applied to the tree trunk texture.
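A rough single-ray sketch of this shading step follows. The exact way the reflected direction and the light direction are combined is not spelled out above, so the clamped dot product used here, the `jitter` parameter and the function names are all assumptions, and the trunks are again taken to be vertical.

```python
import math
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(c / n for c in v)

def trunk_normal(hit_point, trunk_xy, jitter=0.0, rng=None):
    """Outward normal of a vertical cylinder at hit_point, optionally perturbed.

    Each component is offset by a uniform random amount in [-jitter, jitter]
    before renormalizing; this noise model is purely illustrative.
    """
    n = (hit_point[0] - trunk_xy[0], hit_point[1] - trunk_xy[1], 0.0)
    if jitter > 0.0:
        rng = rng or random.Random()
        n = tuple(c + rng.uniform(-jitter, jitter) for c in n)
    return normalize(n)

def trunk_brightness(ray_dir, normal, to_light):
    """Reflect the viewing ray about the normal and compare with the light direction.

    Returns a value in [0, 1]: 1 when the reflected ray points straight at
    the light, 0 when it points away from it.
    """
    k = 2.0 * dot(ray_dir, normal)
    reflected = tuple(d - k * n for d, n in zip(ray_dir, normal))
    return max(0.0, dot(reflected, to_light))

# Hypothetical hit on a trunk at (1, 0), lit from the upper -x direction.
to_light = normalize((-1.0, 0.0, 1.0))
smooth_normal = trunk_normal((0.8, 0.0, 0.9), (1.0, 0.0))
brightness = trunk_brightness((0.8, 0.0, -0.6), smooth_normal, to_light)
rough_normal = trunk_normal((0.8, 0.0, 0.9), (1.0, 0.0), jitter=0.3, rng=random.Random(1))
```

The resulting brightness would then scale the trunk texture's color; passing a seeded `random.Random` keeps the perturbed normals reproducible between runs.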

As the final step the sky is added. It is a simple color replacement for those pixels which did not hit a tree and either cannot see the ground plane or are so close to horizontal that they see the ground plane very far away. The final outcome can be seen in Figure 2. The colors on the left indicate which geometry affected each pixel's final color. The textured models can be seen on the right. In this case the camera is looking upwards, so the mirror reflects the rays in the middle of the image directly down towards the ground. Rays closer to the image border are sent upwards, and the circular perimeter results from limiting the maximum inclination angle to 20°. The light comes from the top-left corner.
