At spring 2012 I did a course in robotics, which involved programming a semi-automatic robot which could fetch items from pre-determined locations and return them back to correct deposit bins. I had a team of four people, and I solved the problems of continuous robust robot localization, task planning and path planning. Others focused on the overall source code architecture, state-machine logic, closed loop driving control and other general topics. The used robot can be seen in Figure 1.

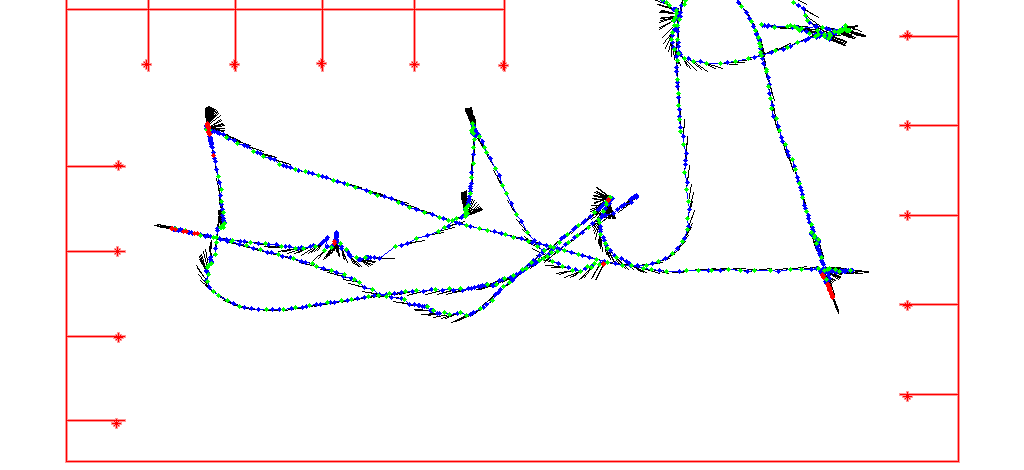

The initial path planning is done by utilizing a pre-drawn bitmap, in which each pixel corresponds to 2 cm in real life. This map represents the allowed positions in which the robot is allowed to move, and extra padding is added to account for robot's physical size. A few of these maps can be seen in Figure 2. Using this discrete map presentation it is fairly straight forward to find the shortest path by using for example A* search. But in practice the shortest path might not be the fastest to drive through, especially when the path has tight turns. Also it doesn't take the robot's final heading into consideration.

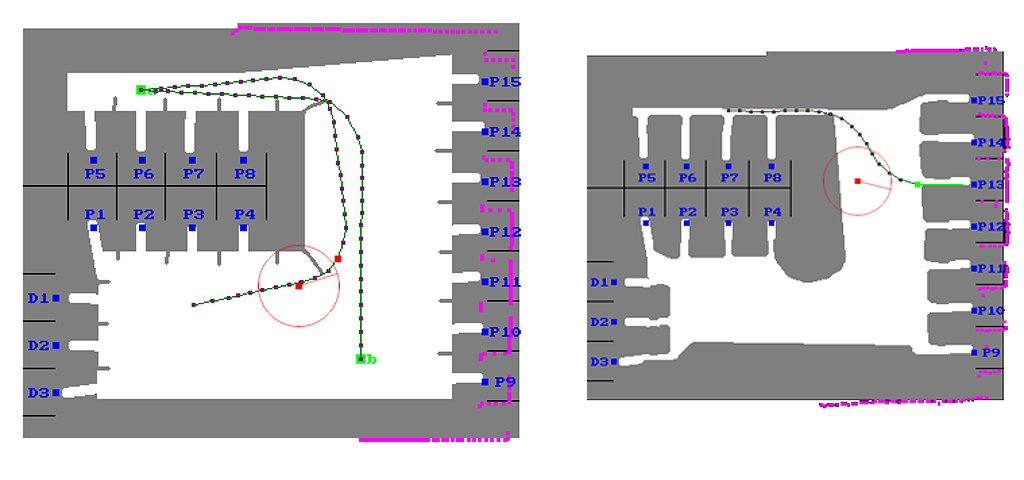

To improve the path, a gradient descent algorithm is used to minimize a better cost function. The total cost of the path is the weighted sum of its length, curvature and the final heading error. The starting and ending locations cannot be changed, but all other way points are free to to be adjusted. The gradient descend iteration starts by numerically estimating ∂⁄∂pi f(p1, p2, ..., pn), where i = 2, ..., n-1, and pi is the location of the ith way point. However this parametrization isn't ideal for the optimization process.

To make it easier to implement the optimization algorithm, each way point's adjustment is parametrized by a single scalar, which indicates the way point's offset from its current location, orthogonal to the path's current direction. This is illustrated in Figure 3. Once each of the partial derivatives ∂⁄∂lif(p1, p2 + l2 n2 , ..., pn) are calculated, the descend step can be performed towards the direction in which the cost function decreases the fastest. Some extra logic is needed to determine the step length and a suitable stopping criteria. Also it must be ensured that the refined path does not go through a forbidden region in the map. The optimized path is a lot faster to drive through than the shortest path, because the robot can drive broader arcs at higher speeds, and reach the end point at already correct heading.

The implemented localization algorithm is quite interesting as well. The robot has a rotatory encoder for both wheels, so we have odometry data available. However it accumulates errors quite fast, and also the robot's initial position should be known. In addition it cannot recover from the robot being manually moved. This is why it is better to utilize the laser scanner's measurement data, which is the distance measurement from ±90°, returning 181 readings in total, with an accuracy of a few centimetres.

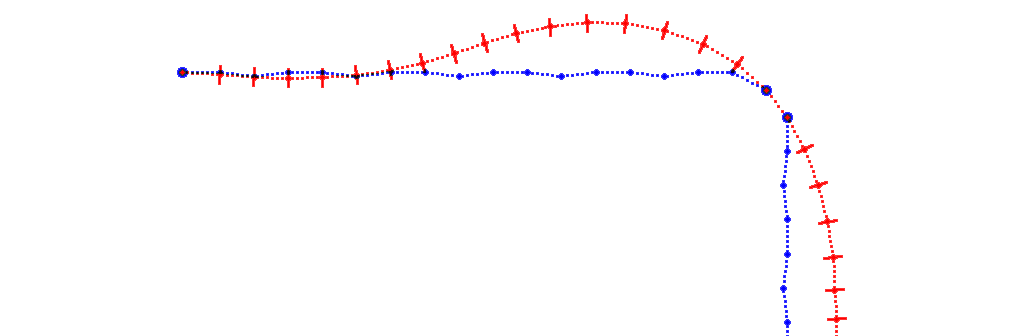

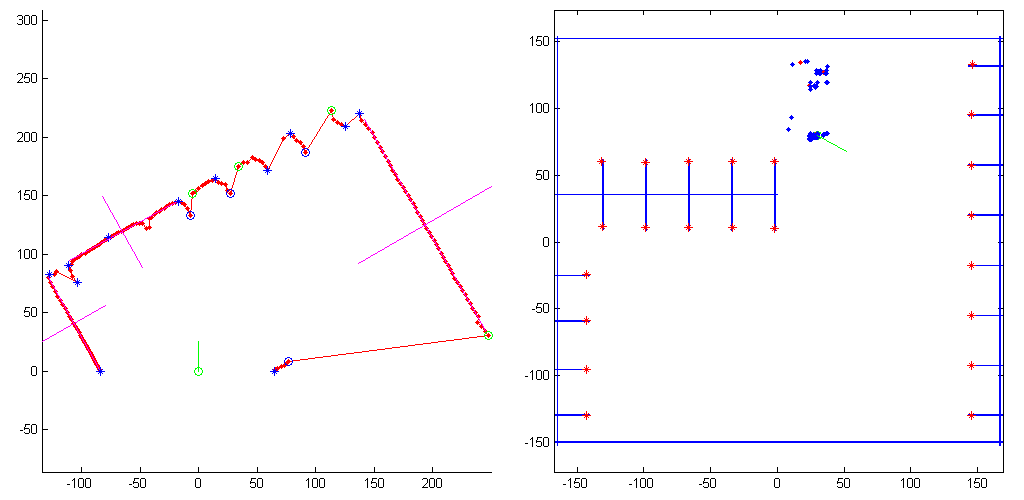

The most advanced solution would be to implement a SLAM (Simultaneous Localization And Mapping) algorithm, which can build a map of the unknown region on-the-fly, while using the same map to do localization. To make the algorithm robust, it should implement features such as loop closing and filter erroneous data. In our project the environment was static and known in advance, so I decided to base the localization algorithm on known landmarks such as corners and walls. These are relatively easy to be robustly detected from laser readings, and an example reading is shown in Figure 4. An other alternative approach would have been to use a particle filter or Kalman filter, but these are non-trivial to implement and debug, to make sure you'll never report an incorrect location.

The actual localization algorithm works by using groups of a few landmarks, and trying to find a similar configuration from the map. For example one corner is not enough to determine the location, but two corners is already sufficient, if we knew that which two corners of the map those corners match to. Similarly a single point and a wall are sufficient for this purpose. The algorithm makes hypotheses about these correspondences, and if the resulting location and orientation is somewhat consistent with the laser readings (for example no walls are observed at the known empty regions of the map), a vote is casted for this candidate location. Clusters of these votes can be seen on the right of Figure 4. The cluster with most votes is then determined, and that is the final location estimate.

The robot cannot rely only on the laser based localization, because the robot might be facing a wall, in which case there might not be sufficient landmarks available for unambiguous localization. It is crucial that the robot knows its position at all times, because that is an integral part of the closed loop control logic which determines the control signals for the wheels, to drive the robot along a planned path. To overcome this problem, I decided to always rely on the odometry based position estimate instead.

When the robot is moving and the laser based localization has been successful for the past one meter or so, accumulated errors in the odometry can be fully compensated for. So in practice the incorrect odometry estimate is transformed into correct world coordinates, and this mapping is re-calibrated always when possible. This combined algorithm has benefits of both sensor systems, and works reliably in all scenarios. If the robot is moved or the system is re-started, the calibration is done by just driving a few meters.

Example results of this algorithm are shown in Figure 5. The robot was driven around by using manual control, and the data was logged into a file. Later this file was read by a C++ program, which executes the localization algorithm and prints out the output into a file. Then this file was read into Matlab, in which the estimated path was plotted. Red dots on the path indicate locations in which the laser data could not be used to unambiguously determine the location. It is also seen that the location estimates don't jump around, and instead the drawn paths are very smooth. This is due to using the odometry based location as a starting point, and using the laser based location only for correcting odometry's errors.

Related blog posts:

|

|

|

|

|

|

|

|

|

|